Contents

Web Scraping Definition: Meaning & Key Insights

A small start-up battled a huge crowded market, a tsunami of information online was crushing it. They needed the insights-presents pricing trend, strategies for competitors and customer preference-manually seemed impossible. And then web scraping saved them.

Through the correct web scraping tool, data collection becomes automatic, even mountains of unstructured information transformed into actionable insights overnight. It stopped surviving but instead became thriving-quick and effective decision making.

It’s not their story alone, after all. It could be yours, too. This is about entering the world of web scraping, where the net is your most potent tool.

Now let’s understand,

What is Scraping?

In very simple terms, scraping means collecting data or information from specific sources like web pages or websites and converting them into structured formats like spreadsheets or databases.

This can be done by manually also but it is more time consuming, so you can also use specialized automation tools.

Now you know what does scraping mean.

But here we are talking about webscraping.

So,

What is Web Scraping?

Web scraping definition: Web scraping defines the process of programmatic extraction of data from websites for either analysis, archiving, or other purposes – such as market research or price monitoring or content aggregation.

(This can also be termed as Internet scraping.)

In nutshell, you can scrape website data like, you can scrape info from website, you can also scrape content from website or if you want to scrape database then you can also extract database from website.

Now you are all set.

Let’s learn some spicy things.

How to Scrape Website?

So there are 2 methods to scrape website:

1) With code

2) Without code (Using webscraping tools)

Let’s go with the first one.

With code:

There are 3 most popular methods for scraping websites with coding.

- BeautifulSup

- Scrapy

- Selenium

1. BeautifulSoup

BeautifulSoup is python’s favourite library for getting data out of static web pages using web scraping. Think of it like a tool to scan the web page’s HTML code and to pull out whatever data you need to grab- be that text, links, images, etc. It especially comes in handy for beginners due to the simplicity in how it’s easy to pick up and utilize.

How BeautifulSoup works:

- The beautifulsoup scans the raw HTML or the code that is behind a webpage and lets one search it through simple commands.

- It’ll help you track down part of the page, including headings, paragraphs, or links, by scanning their html tags like <h1>, <p>, <a>.

- After finding that data you’re interested in, you may store them or process them in some way you’d like.

When to use BeautifulSoup:

- Static websites: Content does not change as the user navigates it; for example, no change in loading new data if you scroll or click on buttons.

- Small scraping projects: No need to scrape thousands of pages; a few will do.

Advantages:

- Very simple: Easy to learn for novices.

- Very fast on small projects: Ideal for scraping one page or maybe a couple of pages.

Disadvantages:

- Not for complex websites: This does not handle heavy interactions, like clicking a button or scrolling.

2. Scrapy

Scrapy is a damn powerful framework for web scraping. It is like an all in one set of tools to handle very large-scale projects of scraping.

It can automatically scrape many websites, track links, and crawl many pages at one time.

(Such a power like HULK.)

How scrapy works:

- Scrapy lets you build a script called “spiders” that crawl a website and extract data.

- It can collect data from multiple pages in one go and you can also store these data in multiple formats like CSV, JSON, or a database.

- Having issues such as managing delay between requests, retrying failed connections? Don’t worry, scrapy will take care of all of these things.

When to use scrapy:

- Large scale project: When you need to scrape data from many pages or multiple websites.

- High performance: Scrapy is developed to support high performance, which means it is very helpful in scraping huge data over a short period of time.

Advantages:

- Efficient for large projects: Supports thousands of pages at one go.

- Has more advanced features: Has some built-in tools to handle things such as login cookies and the handling of errors.

- Powerful and fast: Useful for high volume scraping operations.

Disadvantages:

- More complicated to learn: It takes a much longer period to learn in comparison with BeautifulSoup.

- More setup needed: You have to set up a full project before you can scrape.

3. Selenium

Selenium is a browser-automation tool, and it is broadly used in web scraping when data on the website changes dynamically-for instance, after a button click or form fill.

It simulates actions of a real user, like clicking buttons, filling forms, or scrolling, which lets you scrape data from websites that need interaction to load new content.

How selenium works:

- Selenium opens the real web browser (as Chrome or Firefox) and makes it mimic user behaviour; you can write commands that tell Selenium to click on links, fill in forms, scroll, or even wait for pages to load.

- After an interaction with the page, Selenium is capable of extracting data just like every other scraping tool, but can interact with dynamic content that appears only when a user interacts with it.

When to use selenium:

- Dynamical website: Websites whose content unfolds after some action is completed, like filling a form or button click.

- Interactive web: Any kind of interaction between the users can be emulated by it where content of a page may need scraping.

Advantages:

- Handling complex sites: Is very good for web pages containing JavaScript. Requires an active user to move further ahead.

- Versatile: Pretty much every action which a human would take (click, type, and scroll) can be replicated.

Disadvantages:

- Slower than others: Since it opens up a real browser and it acts like a human is using it, it takes a little longer than most methods.

- Uses more resources: Selenium requires a real browser to run, so the computer uses more resources for its use.

Now my Personal Favourite Method:

Without code:

If you are dumb or lazy (means you don’t know coding) – there are a number of automated scraping tools available in the market that let you scrape any website without writing a single line of code.

These tools can be used by anyone with no need for technical backgrounds, and they make it so much easier to collect data from websites – called “Web scraper”.

What Is a Web Scraper?

So the web scraper is a tool or a program designed to extract data from websites.

It is like your personal digital assistant that collects all the website data automatically without any hustle or hard process.

All you need to do is just point and click on the particular data you want, and the web scraper tool handles the rest.

Now you know what is web scraper.

Types of Web Scrapers

These web scrapers come in any size or form that makes the data collection so easy for your life.

Let’s understand them all one by one.

1. Code-based Scrapers

You can also call these as “DIY Scrapers.”

Because they are built using programming languages.

Who should use them?

Only those people who are tech savvy or who love coding and want complete control over how data is scraped.

Example:

Scraping dynamic websites with too many moving parts, such as an e-commerce website.

2. Web Scraper Extension

Little plug-ins for your browser like Chrome that allow you to scrape data straight while surfing

around.

Good examples would be “dataminer.io” or “webscraper.io”.

Who should use them?

Beginners or casual users who want quick results without writing any code.

Example:

Pulling prices, names, or reviews from a few product pages.

3. Point-and-Click Scrapers

The “drag-and-drop” version of web scraping!

These tools enable you to simply click on the data you desire, and the rest is handled for you.

Who should use them?

People who want ease of use, with no code needed.

Example:

Extract business details from yellow pages directories in just minutes.

4. Cloud-Based Scrapers

These run on remote servers.

No installation is required and they work when you sleep!

Examples include Bright Data and Apify.

Who should use them?

People who have big projects or need data regularly over time can choose cloud based web scraping.

Example:

Collecting data from hundreds of websites for market research.

5. API-Based Scrapers

They’re scraping tools, but instead of web pages, they use the APIs that web sites offer directly to access the data in clean format.

Who should use them?

Developers who want safe and legal structured data access

Example:

To pull Instagram posts or Twitter tweets through their APIs.

6. Specialized Scrapers

They’re just for a special platform like LinkedIn or Instagram, which is only targeted scraping.

Who should use them?

Businesses or marketers with very specific needs.

Example:

Collecting LinkedIn leads or analyzing trending hashtags on Instagram.

7. Hybrid Tools

The multitaskers!

These tools combine manual and automated methods for maximum flexibility.

Who should use them?

Anyone tackling tricky websites that require interactions like clicking or scrolling.

Example:

Scraping a travel website where data only appears after selecting dates.

Now the choice is yours, BUT my personal favourite is Point-and-Click Scrapers.

What is a Point-and-Click Scraper?

These are the tools of simplicity. Instead of coding, you just:

- Point to the part of a website you want data from (like product prices or user reviews).

- Click to define what you need.

The real hero of point and click scrapers is ScrapeLead.

ScrapeLead has made this process even easier with an intuitive interface that anyone can use.

Why ScrapeLead is the Best Web Scraping Tool

For Example:

Point-and-Click Simplicity

You don’t have to write code with ScrapeLead. Simply click on what you want from a website.

It is all point-and-click simplicity.

Supports Any Site

ScrapeLead can access static sites as well as heavy JavaScript dynamic ones.

No website is too complicated for ScrapeLead to scrape and extract data that you need.

Free Credits For Life

For life, every month, get 500 free credits.

Good for testing purposes and small-sized projects without long-term commitment.

(That’s why ScrapeLead is the best free web scraper.)

Powerful Automation

ScrapeLead automates heavy scraping, thus saving time when extracting information from multiple pages or sites simultaneously.

Export to Any Format

Would you need the data extracted in CSV, JSON, or even Excel format?

ScrapeLead makes this all easy as well, through integration with your workflow.

Cost-Effective for Everyone

Whether a newcomer or a full-scale professional project manager, ScrapeLead accommodates all, having flexible pricing that suits your every need.

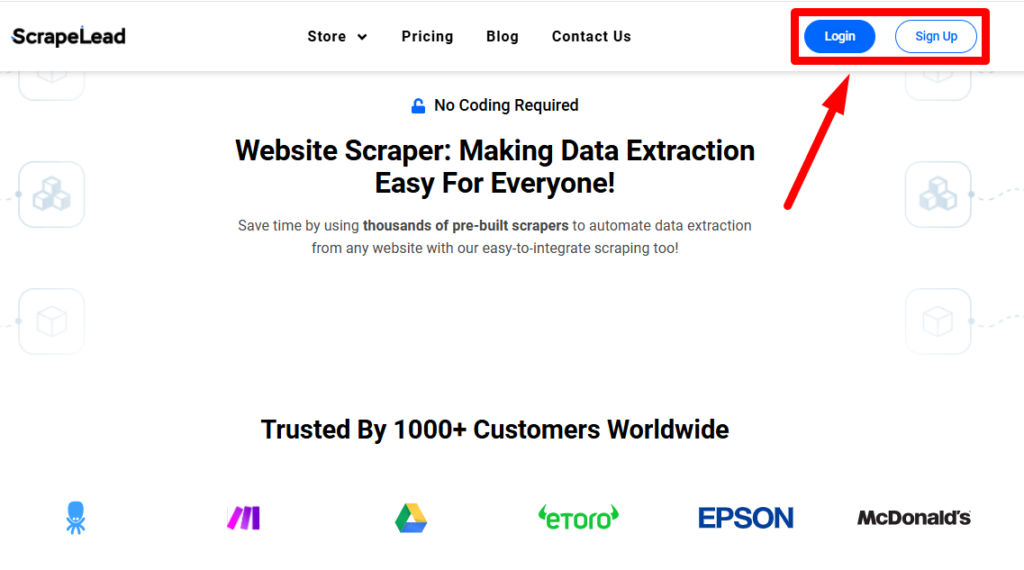

1. Open our website – scrapelead.io

2. Sign up

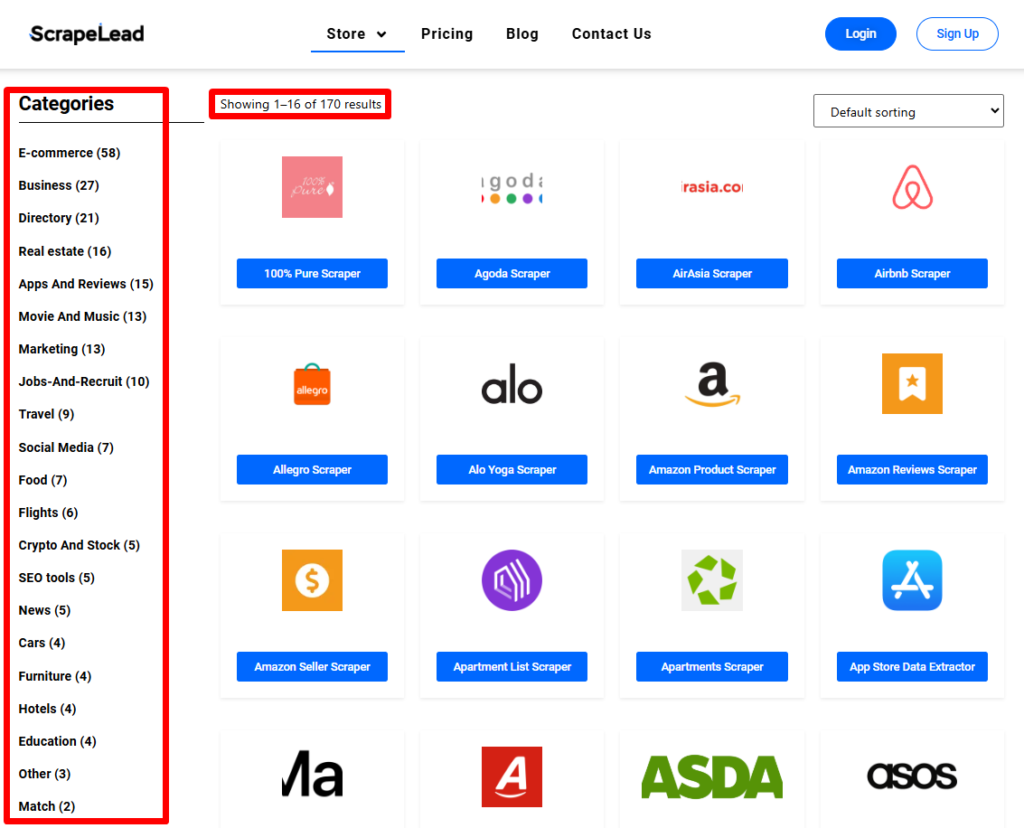

3. Head it towards STORE

4. And you can find there are 170+ products for every category.

(Choose any category as per your need.)

5. Enter the URL.

6. Take a sip of lemonade.

7. Let ScrapeLead extract and organize the data for you.

Who Can Use ScrapeLead?

1. Digital Marketers

Know where your competitors have been, analyze the price competition trend, and detect market trends better.

2. Online Stores

Track product pricing, reviews and competitor catalogs right away to conquer the e-commerce world.

3. Researchers & Analysts

Gather tremendous datasets for studying, reporting or industry analysis effortlessly.

4. Lead Generators

Create awesome contact lists easily with emails along with company information.

5. Social Media Managers

Know your audience, follow trends, and get ideas from social media.

6. Small Businesses

Small businesses can derive valuable insights towards growing their business even without technical skills.

ScrapeLead makes it easy for anyone to scrape data from webpage and then convert it into useful information!

Is Web Scraping Legal?

Web scraping is a little gray when it comes to issues of legality. It really depends on the website rules that you are going to scrape. Keep in mind these things:

- Check Website Rules: You have to check the website terms of service on what is allowed.

- Follow Robots.txt: Most sites will have a robots.txt file which tells you which parts of the site to stay away from while scraping. Respect this always.

- Scrape Responsibly: Avoid overloading the server on a website with requests.

Scraping is usually not an issue as long as information is publicly available. Taking data, personal or copyrighted, can lead to big troubles. Scrapping should be ethical and responsible.

Are Web Scrapers Legal?

Wellll this depends on HOW and WHERE they are used.

How It’s Done:

- Using a scraper in an overload way in relation to making too many requests to the server of a website is illegal since it is somehow harming the functioning of the website itself and may sometimes cause disturbances to the functioning.

- If it goes around security measures or terms of service, for instance, logging in with false credentials or scraping private data, then that’s a big no-no and may get them into legal trouble.

- On the other hand, scraping ethically—for example, just taking publicly available information without damaging the website—is fine. But, it’s always a gray area, and there are exceptions.

Where It’s Applied:

- Public web sites: Since you are web scraping information that is publicly given and does not violate the TOS, in most cases is less risky.

- Private sites or copyrighted materials: Scraping private data such as user profiles, sensitive information, or copyrighted material could be an infringement.

In simple words: Scraping is similar to walking into a public store and clicking pictures of what you can see. But if you break into a place or take pictures of what is hidden, that’s when the law steps in. First of all, check the rules of the site, also called terms of service, to ensure that you’re not crossing the line!

Key Takeaways

Web scraping turns overwhelming floods of data online into manageable streams of insight. Tools such as ScrapeLead eliminate complications, making them accessible to every user, irrespective of their technical skills.

If you are someone trying to gather a few data points or you are a business managing large-scale data scraping, ScrapeLead provides a full, user-friendly solution.

So why wait?

Visit ScrapeLead.io and see how easy it is to unlock the potential of web data.

Start scraping instantly

Sign up now, and get free 500 credits everymonth.

No credit card required!

Related Blog

How to Scrape Target Without Coding?

Quickly extract product data, reviews, and contact info from Target.com with ScrapeLead’s no-code Target Scraper.

Scrape Pinterest Data Without Coding: 2025 Beginner’s Guide

Scrape Pinterest data easily, and take your business and brand’s online presence to the next level.

Top 3 Web Scraping Chrome Extensions for 2025

Top web scraping Chrome extensions like Web Scraper, Instant Data Scraper, and Data Miner make data extraction easy and efficient for all your needs.